Authors

Ahmed Aldahdooh, Wassim Hamidouche, Olivier Déforges

Univ Rennes, INSA Rennes, CNRS, IETR - UMR 6164, F-35000 Rennes, France

ahmed.aldahdooh@insa-rennes.fr

Paper

Selective and Features based Adversarial Example Detection

Abstract

Security-sensitive applications that rely on Deep Neural Networks (DNNs) are vulnerable to small perturbations crafted to generate Adversarial Examples (AEs), which are imperceptible to humans and cause DNNs to misclassify them. Many defense and detection techniques have been proposed. State-of-the-art detection techniques are designed for specific attacks or are broken by others, need knowledge about the attacks, are not consistent, increase the model parameter overhead, are time-consuming, or add latency at inference time. To balance these factors, we propose a novel unsupervised detection mechanism that combines the concepts of selective prediction, processing of model layer outputs, and knowledge transfer in a multi-task learning setting. It is called Selective and Feature based Adversarial Detection (SFAD). Experimental results show that the proposed approach achieves results comparable to state-of-the-art methods against the tested attacks in white-box, black-box, and gray-box scenarios. Moreover, results show that SFAD is fully robust against High Confidence Attacks (HCAs) on MNIST and partially robust on CIFAR-10.

SFAD: Selective and Feature based Adversarial Detection

Architecture

Detector (High-level Model Architecture)

The input sample is passed to the CNN model to obtain the outputs of its last N layers, which are then processed by the detector classifiers. SFAD yields prediction and selective probabilities that determine the predicted class of the input sample and whether it is adversarial.
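
Collecting the outputs of the last N layers amounts to recording intermediate activations during the forward pass. The following is a minimal, framework-free sketch under stated assumptions: each layer is a plain Python function, and the "last N layers" are simply taken to be the final N entries of the layer list (which layers SFAD actually taps is not specified here). The toy layers in the usage lines are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# Assumption: a layer is modeled as a plain function from one activation vector to the next.
Layer = Callable[[List[float]], List[float]]


def forward_with_taps(layers: Sequence[Layer], x: Sequence[float],
                      n_last: int) -> Tuple[List[float], List[List[float]]]:
    """Run x through all layers; return (final output Pb, outputs of the last n_last layers)."""
    if not 1 <= n_last <= len(layers):
        raise ValueError("n_last must be between 1 and the number of layers")
    outputs: List[List[float]] = []
    h = list(x)
    for layer in layers:
        h = layer(h)
        outputs.append(h)  # record every intermediate activation
    return outputs[-1], outputs[-n_last:]


# Usage with three hypothetical toy layers.
layers = [
    lambda h: [2 * v for v in h],  # hidden layer 1
    lambda h: [v + 1 for v in h],  # hidden layer 2
    lambda h: [sum(h)],            # output layer
]
pb, taps = forward_with_taps(layers, [1.0, 2.0], n_last=2)
print(pb, taps)  # [8.0] [[3.0, 5.0], [8.0]]
```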

Detector (Model Architecture)

It is believed that the last N layers of a DNN have the potential to detect and reject AEs. At this very high level of representation, AEs are indistinguishable from samples of the target class. Unlike other works, this work 1) processes the representative outputs Zj of the last layers as features; 2) uses Multi-Task Learning (MTL), which combines related tasks under one or more loss functions and generalizes better, especially with the help of auxiliary tasks; and 3) utilizes the selective prediction concept. To build safe DL models, the prediction uncertainties Sqj and St have to be estimated, and a rejection mechanism that controls the uncertainty has to be defined as well. Here, a Selective and Feature based Adversarial Detection (SFAD) method is demonstrated. As depicted in the Figure, SFAD consists of two main blocks (in grey): the selective AEs classifiers block and the selective knowledge transfer classifier block. Besides the DNN prediction Pb, the two blocks output 1) the detector prediction probabilities Pqj and Pt and 2) the selective probabilities Sqj and St. The detection blocks (in red) use these probabilities to decide whether the input x is adversarial.
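
The decision made by the detection blocks can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that the selective detector compares each selective probability (Sqj, St) with its own threshold, that the confidence detector does the same with the top-class confidence of each prediction (Pqj, Pt), and that the ensemble detector fires when either of them fires or when the predicted labels of Pb, Pqj, and Pt disagree. The function names, the ensemble rule, and the threshold values are hypothetical.

```python
from typing import Sequence


def argmax(probs: Sequence[float]) -> int:
    """Index of the largest value, i.e. the predicted class."""
    return max(range(len(probs)), key=lambda i: probs[i])


def selective_detect(selective: Sequence[float], thresholds: Sequence[float]) -> bool:
    """Flag x as adversarial if any selective probability (Sq1..SqN, St)
    falls below its threshold."""
    return any(s < th for s, th in zip(selective, thresholds))


def confidence_detect(predictions: Sequence[Sequence[float]],
                      thresholds: Sequence[float]) -> bool:
    """Flag x as adversarial if the top-class confidence of any detector
    classifier (Pq1..PqN, Pt) falls below its threshold."""
    return any(max(p) < th for p, th in zip(predictions, thresholds))


def ensemble_detect(pb: Sequence[float],
                    predictions: Sequence[Sequence[float]],
                    selective: Sequence[float],
                    sel_thresholds: Sequence[float],
                    conf_thresholds: Sequence[float]) -> bool:
    """Assumed ensemble rule: adversarial if either detector fires, or if the
    baseline prediction Pb and the detector classifiers disagree on the label."""
    labels = {argmax(pb)} | {argmax(p) for p in predictions}
    return (selective_detect(selective, sel_thresholds)
            or confidence_detect(predictions, conf_thresholds)
            or len(labels) > 1)


# Usage with N = 2 selective AEs classifiers plus the transfer classifier t.
pb = [0.1, 0.9]
preds = [[0.2, 0.8], [0.3, 0.7], [0.25, 0.75]]  # Pq1, Pq2, Pt
print(ensemble_detect(pb, preds, [0.9, 0.95, 0.85], [0.5] * 3, [0.6] * 3))  # False
print(ensemble_detect(pb, preds, [0.9, 0.30, 0.85], [0.5] * 3, [0.6] * 3))  # True
```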

Selective AEs Classifiers

Prediction task: we process the representative outputs Zj of the last N layer(s) in different ways to make the features of clean inputs more distinctive. This limits the feature space that the adversary can use to craft AEs. Each of the last N layer outputs has its own feature space, since the propagation of the perturbations becomes clearer as the DNN goes deeper. As a result, each of the N classifiers is trained on a different feature space, and combining them, as well as increasing N, should enhance the detection process. The aim of this block is to build N individual classifiers. The Figure shows the architecture of one classifier. The input of each classifier is one or more of the representative outputs of the last N layers. As depicted in the Figure, each selective classifier consists of different processing blocks: an auto-encoders block, an up/down-sampling block, a bottleneck block, and a noise block. These blocks aim at producing distinguishable features for the input samples so that the detector can recognize AEs efficiently.

Selective task: the aim of this task is to train the prediction task with the support of selective prediction/rejection, as shown in the Figure. The input of the selective task is the last-layer representative output of the prediction task qj. The selective task architecture is simple: one dense layer with ReLU activation and a batch normalization (BN) layer, followed by a special Lambda layer that divides the BN output by 10, and finally one output dense layer with sigmoid activation.

Auxiliary task: in MTL models, the auxiliary task mainly helps the prediction task generalize. Most MTL models focus on one main task, and the other (auxiliary) tasks must be related to it. The main task of our classifier is to train a selective prediction on the input features. To optimize this task, the low-level features have to be accurate for both the prediction and selective tasks without overfitting to either of them. Hence, the original prediction process is used as an auxiliary task.
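
The selective-task head described above (dense layer with ReLU, BN, division by 10, sigmoid output) can be written as a plain forward pass. This sketch assumes inference-mode BN with given running statistics and an epsilon of 1e-3; all weights and function names are hypothetical placeholders, and a real model would normally be built with a deep-learning framework rather than lists of floats.

```python
import math
from typing import Dict, List, Sequence

Matrix = List[List[float]]  # weights[out_unit][in_unit]


def dense(x: Sequence[float], weights: Matrix, bias: Sequence[float]) -> List[float]:
    """Fully connected layer: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x: Sequence[float]) -> List[float]:
    return [max(0.0, v) for v in x]


def batch_norm_inference(x: Sequence[float], mean: Sequence[float], var: Sequence[float],
                         gamma: Sequence[float], beta: Sequence[float],
                         eps: float = 1e-3) -> List[float]:
    """Batch normalization at inference time, using running mean/variance (eps assumed)."""
    return [g * (v - m) / math.sqrt(s + eps) + b
            for v, m, s, g, b in zip(x, mean, var, gamma, beta)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def selective_head(q: Sequence[float], hidden_w: Matrix, hidden_b: Sequence[float],
                   bn: Dict[str, Sequence[float]], out_w: Matrix,
                   out_b: Sequence[float]) -> float:
    """Dense+ReLU -> BN -> divide by 10 (Lambda layer) -> Dense(1)+sigmoid.
    Returns a selective probability in (0, 1)."""
    h = relu(dense(q, hidden_w, hidden_b))
    h = batch_norm_inference(h, bn["mean"], bn["var"], bn["gamma"], bn["beta"])
    h = [v / 10.0 for v in h]          # the special Lambda layer
    logit = dense(h, out_w, out_b)[0]  # single output unit
    return sigmoid(logit)


# Usage with hypothetical 2-unit weights (identity hidden layer, unit BN statistics).
bn = {"mean": [0.0, 0.0], "var": [1.0, 1.0], "gamma": [1.0, 1.0], "beta": [0.0, 0.0]}
s = selective_head([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], bn,
                   [[1.0, 1.0]], [0.0])
print(s)
```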

Selective Knowledge Transfer Classifier

The idea behind this block is that each set of inputs (the confidence values of the Y classes from the j-th classifier) is a special feature of the clean input, and combining different sets of these features makes them more robust. Hence, we transfer this knowledge of clean inputs to the classifier. Moreover, at inference time, we believe that an AE will generate a different distribution of confidence values, so even if it fools one selective AEs classifier, it may not fool the others.

Prediction task: the output of the prediction task of the selective AEs classifier qj(z) is the class prediction values Pqj. The confidence values from the N prediction tasks are concatenated to form the input Q of the selective knowledge transfer block, as illustrated in the Figure. Its classifier consists of one or more dense layer(s) and yields the confidence values Pt for the Y classes.

Selective task: the selective task is also integrated in the knowledge transfer classifier to selectively predict/reject AEs.

Auxiliary task: similar to the selective AEs classifiers, the knowledge transfer classifier has an auxiliary network, h, that is trained using the same prediction task as assigned to t.
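
Building the input Q and the transfer prediction Pt can be sketched as below. The sketch assumes a single dense layer followed by a softmax (the text allows one or more dense layers) and uses hypothetical weights chosen only to make the example easy to follow; it omits the selective and auxiliary heads.

```python
import math
from typing import List, Sequence


def concat_confidences(pqs: Sequence[Sequence[float]]) -> List[float]:
    """Build Q by concatenating the Y-class confidence vectors Pq1..PqN."""
    q: List[float] = []
    for p in pqs:
        q.extend(p)
    return q


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def transfer_prediction(q: Sequence[float], weights: List[List[float]],
                        bias: Sequence[float]) -> List[float]:
    """One dense layer + softmax mapping Q (length N*Y) to Pt (length Y)."""
    logits = [sum(w * x for w, x in zip(row, q)) + b for row, b in zip(weights, bias)]
    return softmax(logits)


# Usage: N = 2 classifiers, Y = 3 classes; hypothetical weights that simply add
# up the confidence each classifier gives to the same class.
Y = 3
q = concat_confidences([[0.7, 0.2, 0.1], [0.6, 0.3, 0.1]])
weights = [[1.0 if j % Y == c else 0.0 for j in range(len(q))] for c in range(Y)]
pt = transfer_prediction(q, weights, [0.0] * Y)
print(len(q), max(range(Y), key=lambda c: pt[c]))  # 6 0
```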

Performance at FP = 10%

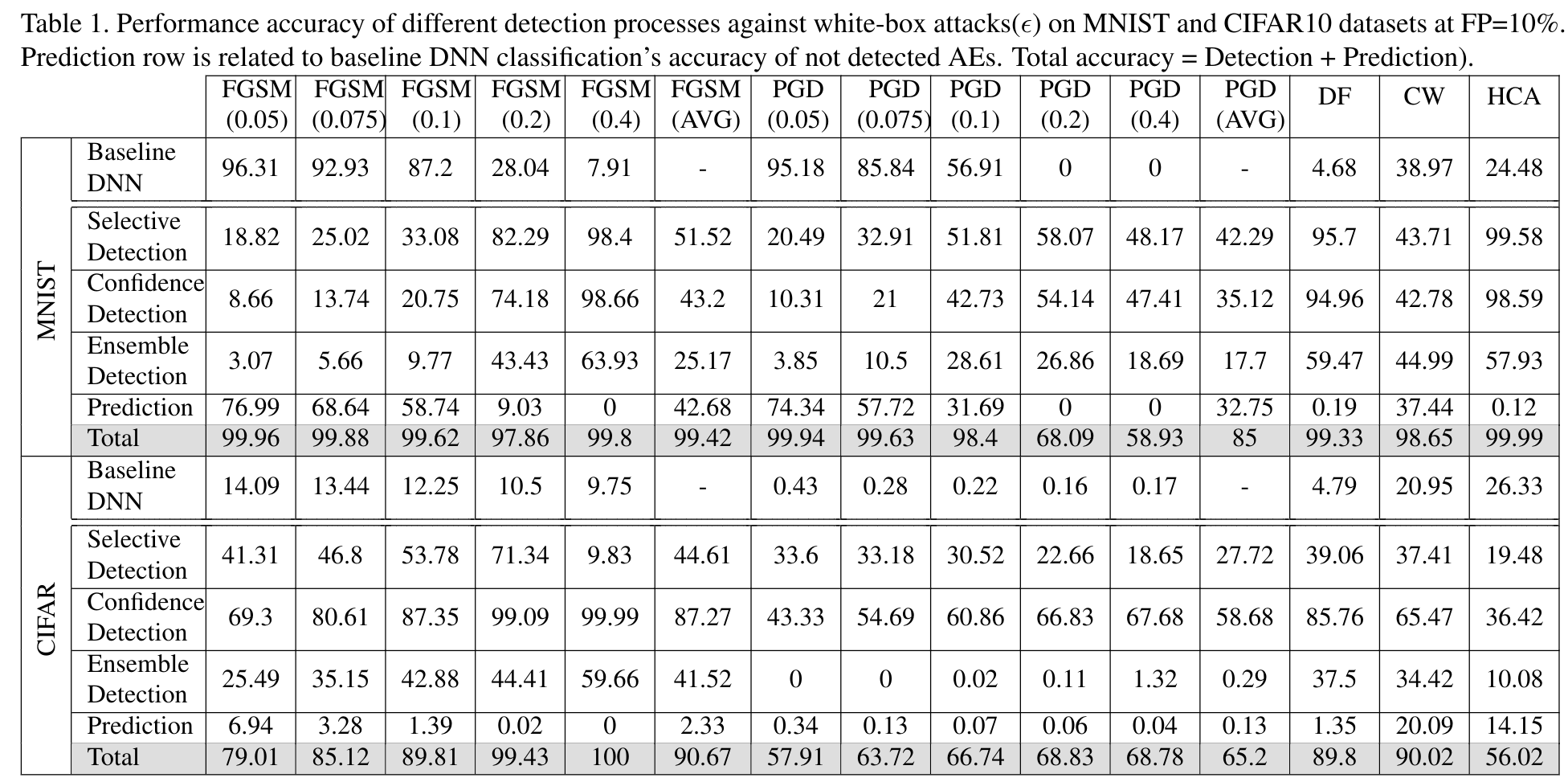

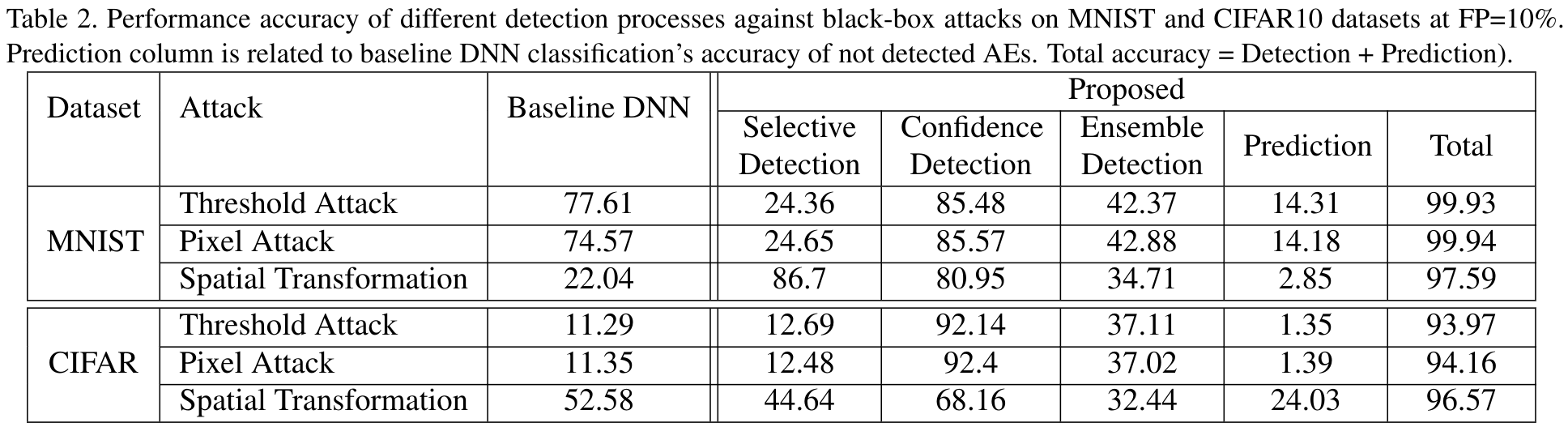

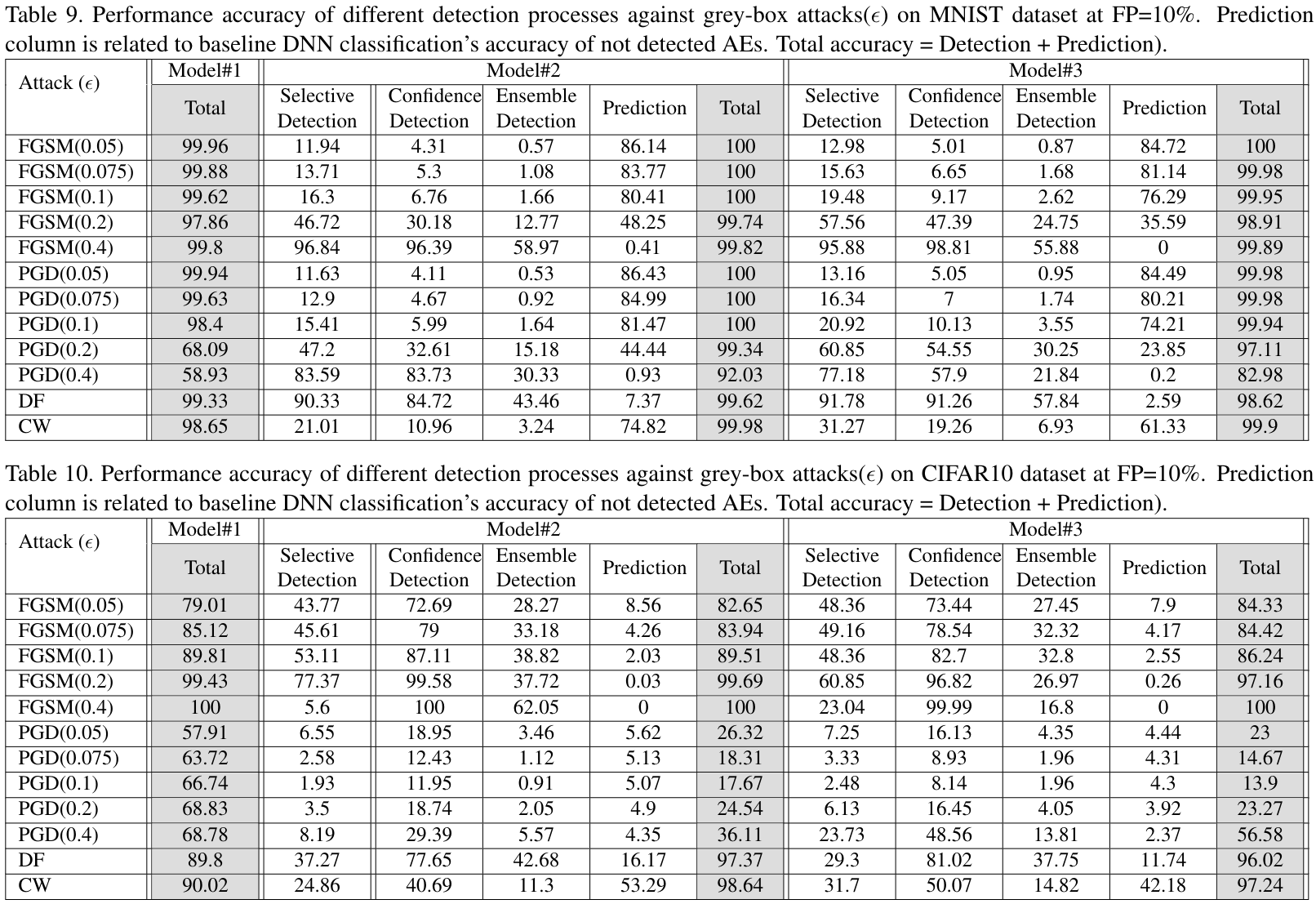

The tables show the selective, confidence, and ensemble detection accuracies of the SFAD prototype on the MNIST and CIFAR-10 datasets. They also show the baseline DNN prediction accuracy on the AEs in the “Baseline DNN” row and on the undetected AEs in the “prediction” row. The “Total” row is the total accuracy over samples that are either detected or correctly classified/predicted.
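
Reporting results at FP = 10% requires choosing detection thresholds. A common recipe, assumed here because the text does not spell it out, is to take the threshold at which 10% of clean validation samples are (falsely) flagged, given that low selective or confidence scores indicate AEs. The sketch also computes one reading of the “Total” row. All names and data are hypothetical.

```python
from typing import Sequence


def threshold_at_fp(clean_scores: Sequence[float], fp_rate: float = 0.10) -> float:
    """Pick a threshold so that roughly fp_rate of the clean scores fall strictly
    below it (i.e. would be falsely flagged as adversarial). Ties can make the
    realized rate smaller."""
    ranked = sorted(clean_scores)
    k = min(int(fp_rate * len(ranked)), len(ranked) - 1)  # clean samples to reject
    return ranked[k]


def total_accuracy(detected: Sequence[bool], correct: Sequence[bool]) -> float:
    """Share of AEs that are either detected or, when missed, still classified
    correctly (one reading of the "Total" row)."""
    ok = sum(1 for d, c in zip(detected, correct) if d or c)
    return ok / len(detected)


# Usage with synthetic clean scores 0..99 and four hypothetical AEs.
clean = [float(i) for i in range(100)]
th = threshold_at_fp(clean, 0.10)
print(th, sum(s < th for s in clean))  # 10.0 10
print(total_accuracy([True, False, False, True], [False, True, False, False]))  # 0.75
```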

Performance against white-box attacks

We tested the proposed model against different types of attacks: Fast Gradient Sign Method (FGSM), Projected Gradient Descent (PGD), Carlini-Wagner (CW), DeepFool (DF), and High Confidence Attacks (HCA).
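
As a reminder of how the simplest of these white-box attacks works, FGSM moves the input by ε·sign(∇x J(θ, x, y)), where J is the training loss. The sketch applies it to a hypothetical two-feature logistic-regression model, for which the input gradient of the binary cross-entropy is (σ(w·x + b) - y)·w; the model, ε = 0.3, and the [0, 1] clipping range are illustrative assumptions, not the experimental setup.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """P(class 1 | x) under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, eps: float) -> List[float]:
    """x_adv = clip(x + eps * sign(grad_x J), 0, 1), with J the binary
    cross-entropy; for logistic regression grad_x J = (p - y) * w."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]


# Usage: a correctly classified clean input is pushed across the decision boundary.
w, b = [2.0, -1.0], 0.0
x, y = [0.6, 0.4], 1
x_adv = fgsm(w, b, x, y, eps=0.3)
print(predict(w, b, x) > 0.5, predict(w, b, x_adv) > 0.5)  # True False
```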

Performance against black-box attacks

For the black-box scenario, the Threshold Attack (TA), Pixel Attack (PA), and Spatial Transformation attack (ST) are used in the testing process.

Performance against gray-box attacks

The gray-box scenario assumes that only the model's training data and the outputs of the DNN model are known, while the model architecture is not. Hence, we trained two substitute models, named Model#2 and Model#3, for MNIST and CIFAR-10. White-box AEs were then generated using these substitute models.

Citation

@misc{aldahdooh2021selective,

title={Selective and Features based Adversarial Example Detection},

author={Ahmed Aldahdooh and Wassim Hamidouche and Olivier Déforges},

year={2021},

eprint={2103.05354},

archivePrefix={arXiv},

primaryClass={cs.CR}

}